Subspace-Configurable Networks

2Complexity Science Hub Vienna, Austria

3University of Macao, China

4ETH Zurich, Switzerland

*Equal contribution

Abstract

While the deployment of deep learning models on edge devices is increasing, these models often lack robustness when faced with dynamic changes in sensed data. This can be attributed to sensor drift, or variations in the data compared to what was used during offline training due to factors such as specific sensor placement or naturally changing sensing conditions.

Hence, achieving the desired robustness requires either an invariant architecture or specialized training approaches, such as data augmentation. Alternatively, input transformations can be treated as a domain shift problem and solved by post-deployment model adaptation.

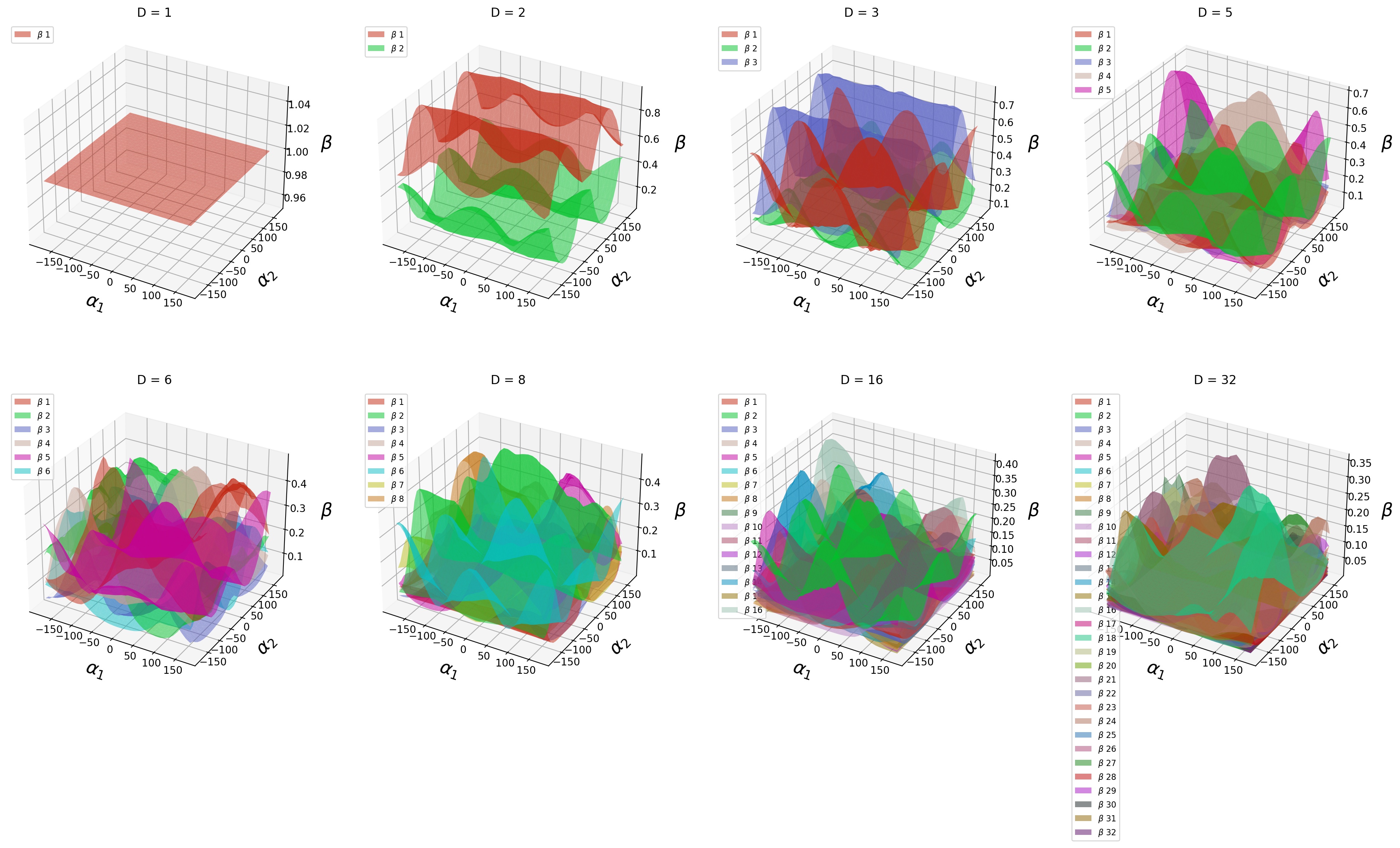

In this paper, we train a parameterized subspace of configurable networks, where an optimal network for a particular parameter setting is part of this subspace. The obtained subspace is low-dimensional and has a surprisingly simple structure even for complex, non-invertible transformations of the input, leading to an exceptionally high efficiency of subspace-configurable networks (SCNs) when limited storage and computing resources are at stake.

SCN's Performance on Rotation-MLP-MNIST

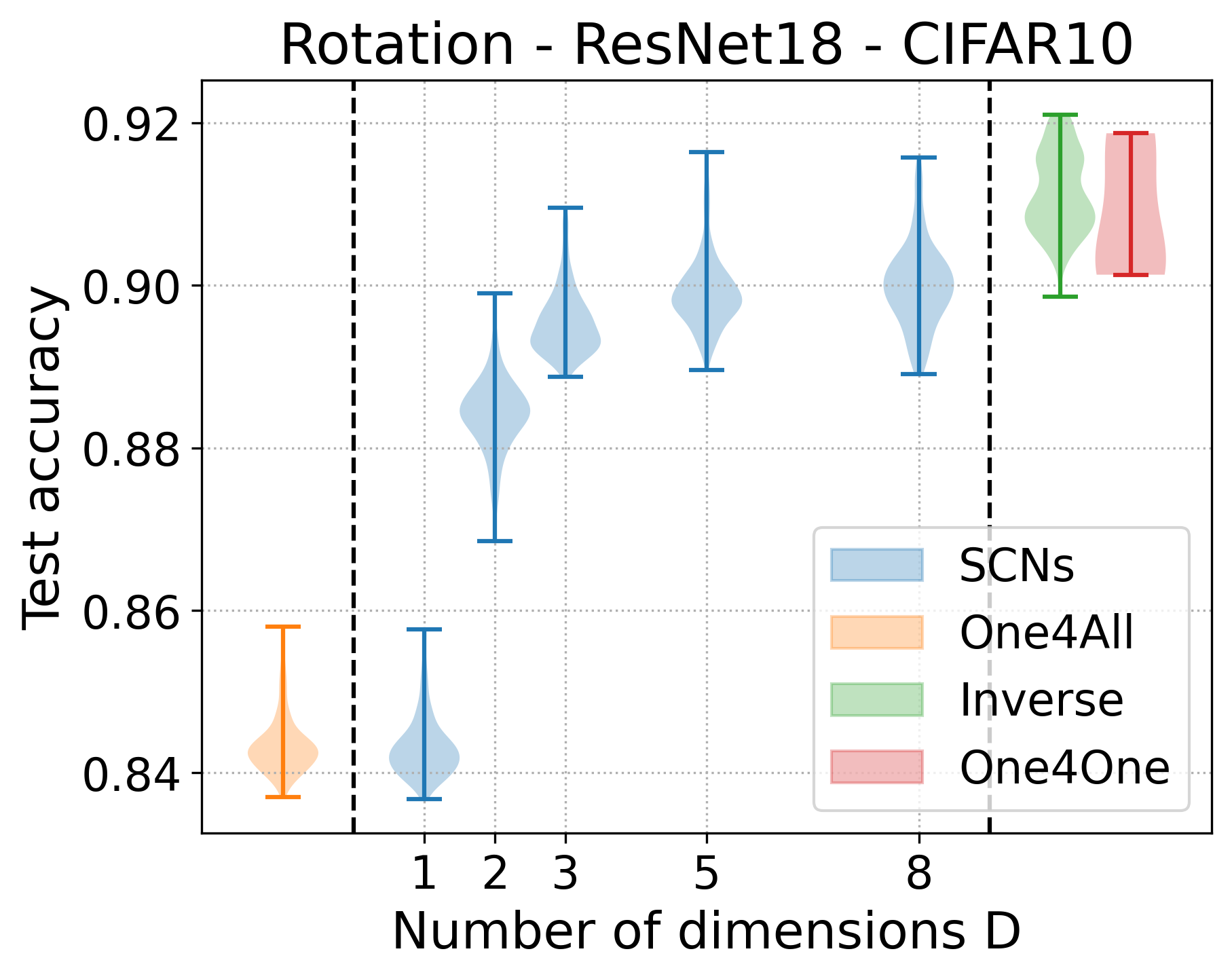

SCN's Performance on Rotation-ResNet18-CIFAR10

SCNs already achieve high test accuracy for low D

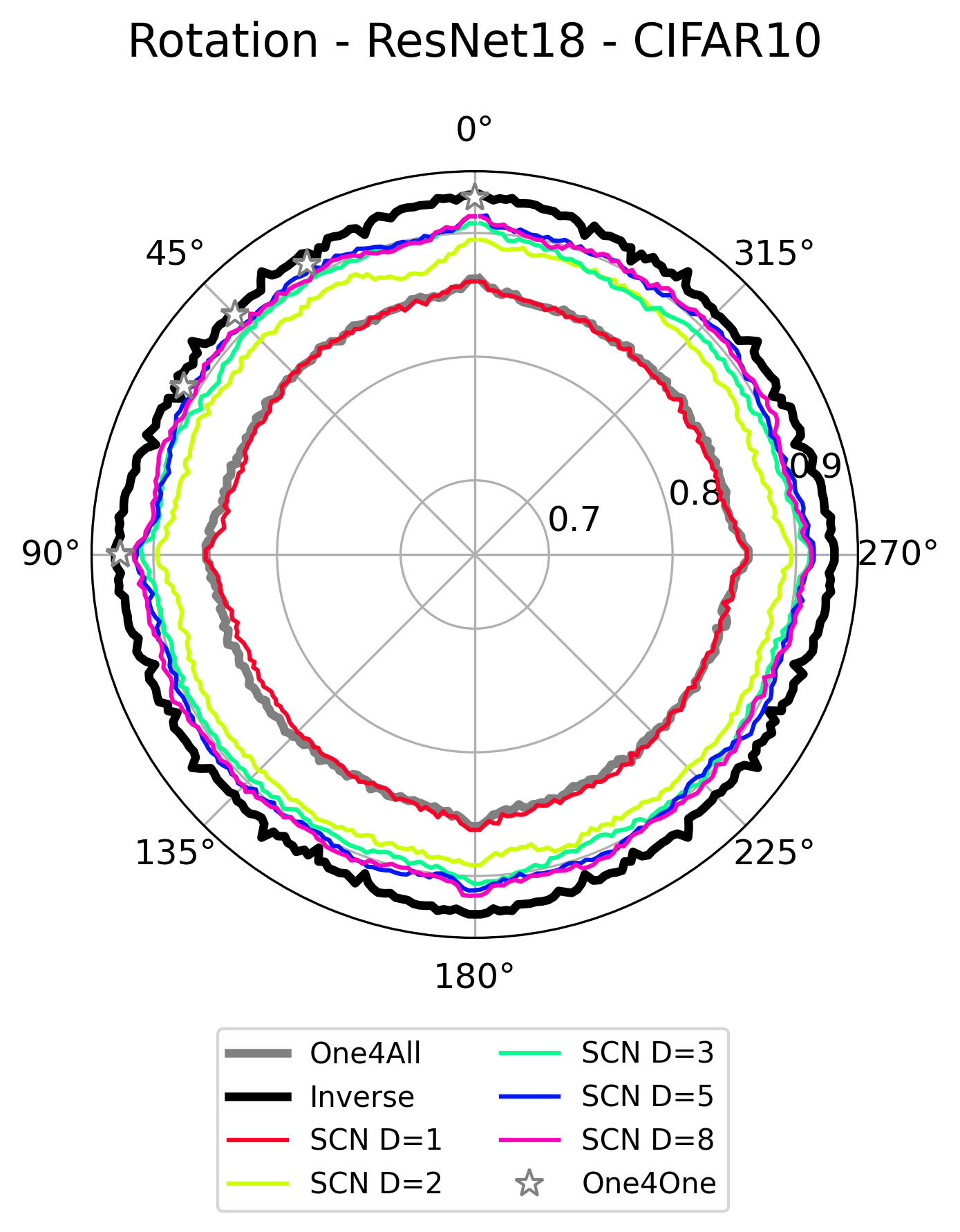

SCN test set accuracy on 2D rotations.

Low-dimensional

Play with the SCN beta-subspace

See the interactive SCN beta-subspace visualization page for all experiments in the paper.

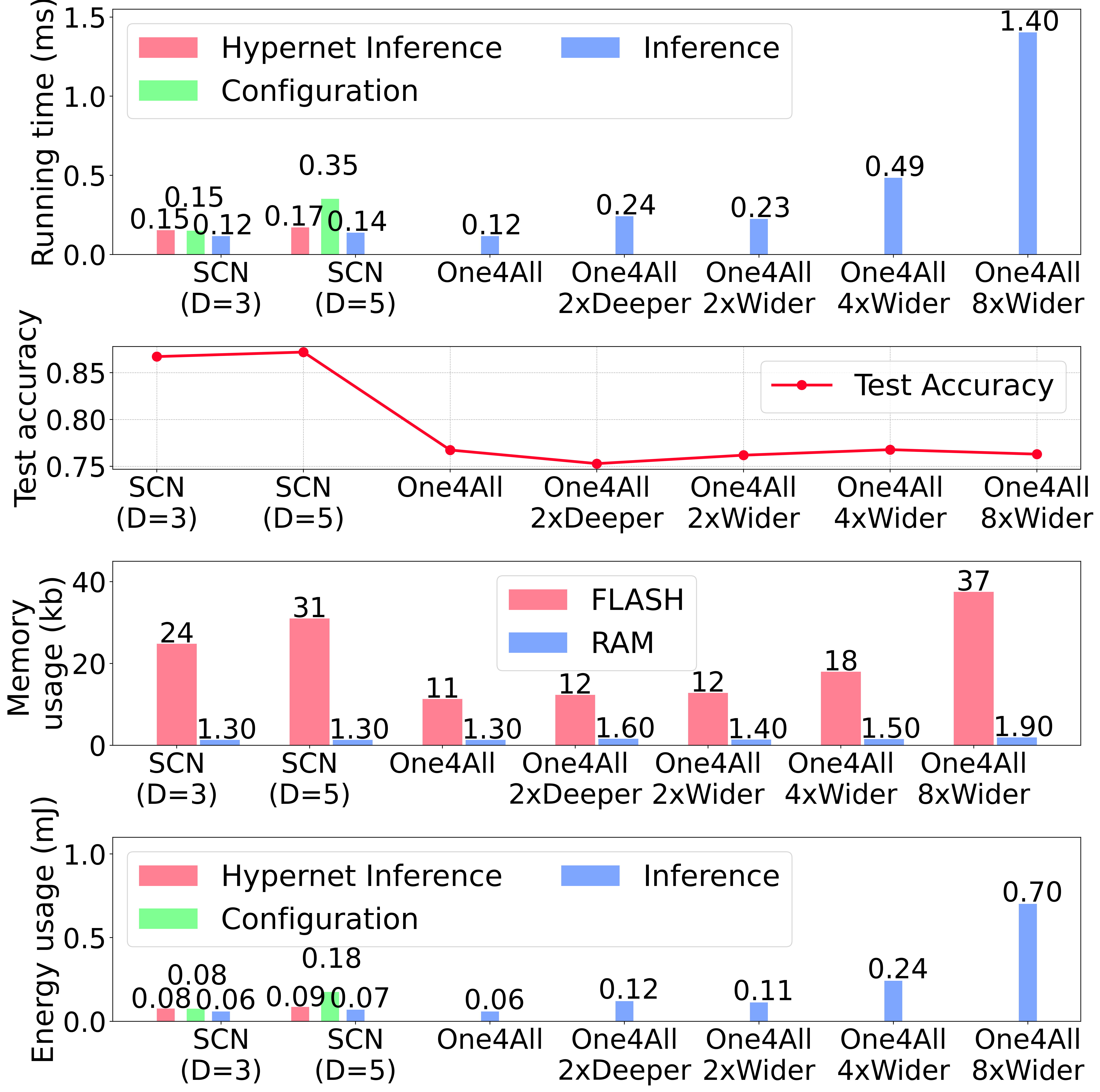

IoT Results

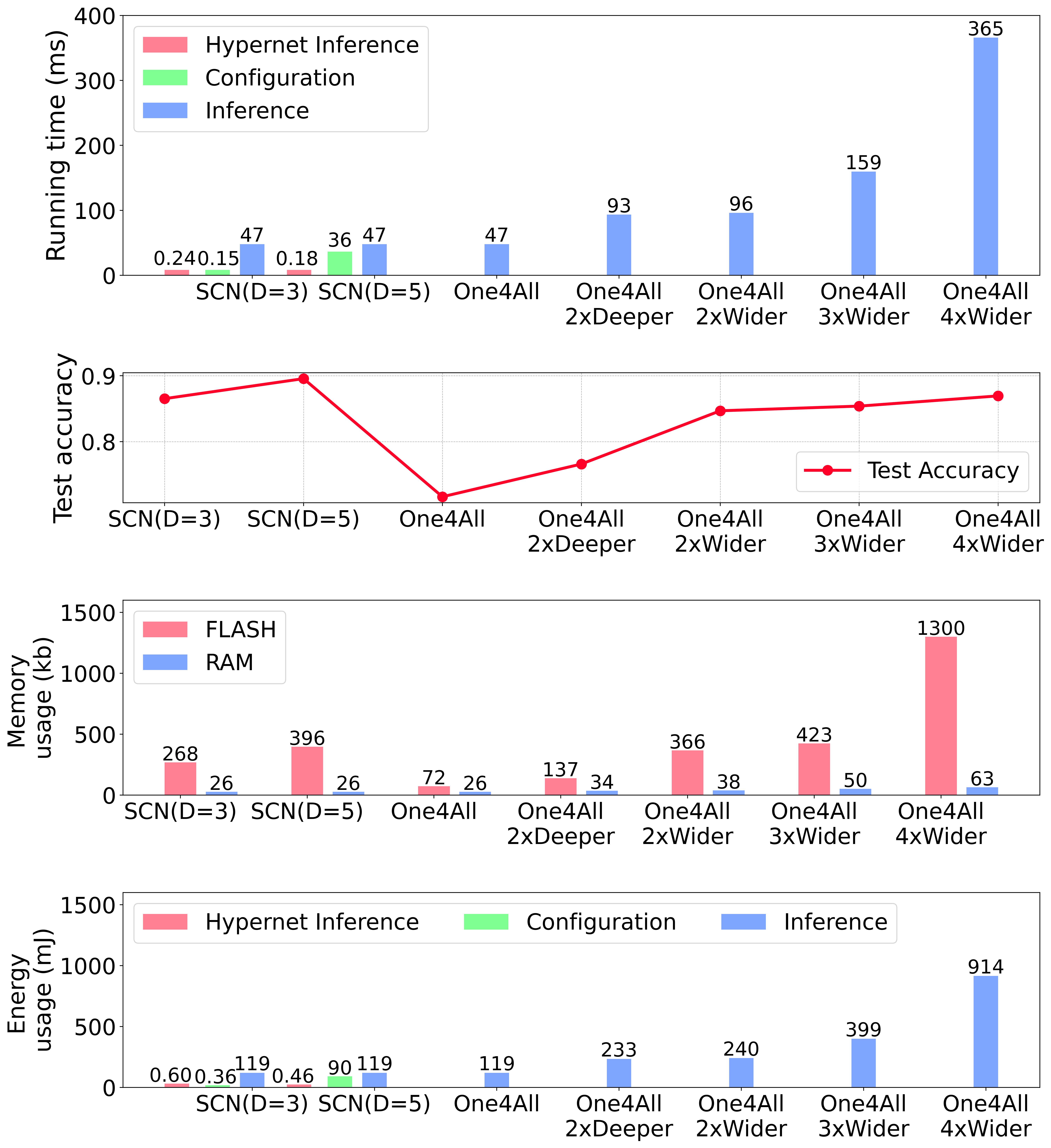

Fruit Classification on Arduino Nano 33 BLE

Traffic Sign Classification on ESP32-S3-EYE

This paper addresses the problem of model reconfiguration and robustness to input transformations under severe resource constraints. We design subspace-configurable networks (SCNs) that learn low-dimensional configuration subspaces and draw optimal model weights from this structure. We achieve surprisingly high accuracies even for a low number of configuration dimensions and observe a simple and intuitive structure of the subspace of optimal solutions for all investigated input transformations.
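The idea of drawing model weights from a low-dimensional configuration subspace can be illustrated with a minimal sketch. It assumes, for illustration only, that the configured weights are a linear combination of D base weight vectors, and that the mixing coefficients (called beta here) come from a small configuration function of the transformation parameter, in this case a rotation angle. The names `config_beta` and `configure_weights`, the (cos, sin) input encoding, the softmax output, and all numeric values are hypothetical and not taken from the paper's code.

```python
import math

def config_beta(alpha, W, b):
    """Hypothetical configuration function: maps a rotation angle alpha
    (in radians) to D mixing coefficients.

    Assumption: a single linear layer on (cos alpha, sin alpha) followed
    by a softmax, so the coefficients are positive and sum to 1.
    """
    feats = (math.cos(alpha), math.sin(alpha))
    logits = [w[0] * feats[0] + w[1] * feats[1] + bias for w, bias in zip(W, b)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def configure_weights(beta, bases):
    """Combine D base weight vectors into one configured weight vector:
    theta[j] = sum_i beta[i] * bases[i][j]."""
    n = len(bases[0])
    return [sum(b_i * base[j] for b_i, base in zip(beta, bases)) for j in range(n)]

# Toy setup with D = 3 base models of 4 weights each (made-up numbers).
W = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
b = [0.0, 0.0, 0.0]
bases = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]

beta = config_beta(math.pi / 2, W, b)   # coefficients for a 90-degree rotation
theta = configure_weights(beta, bases)  # weights of the configured model
```

In this sketch only the D base vectors and the tiny configuration function need to be stored, which is the kind of saving that matters on devices with limited storage and compute. In a trained system the base vectors and the configuration function would be learned jointly; here they are fixed by hand.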

BibTeX

@article{saukh2023representing,
  title={Representing Input Transformations by Low-Dimensional Parameter Subspaces},
  author={Saukh, Olga and Wang, Dong and He, Xiaoxi and Thiele, Lothar},
  journal={arXiv preprint arXiv:2305.13536},
  year={2023}
}